Being on the PiWars (not-so-official-but-still-the-place-to-be-to-talk-to-Mike-and-Tim) Discord server is really paying off. So many like-minded people sharing new and interesting stuff. One of those things ended up being ST's 'VL53L5CX' time-of-flight sensor. Similarly to the previous variants, 'VL53L0X' and 'VL53L1X', the 'VL53L5CX' has zones (up to 8x8 of them), but unlike its predecessors it exposes the value of each zone. It feels like the perfect match for Flig! Thanks to Pal, we've got three pairs of tiny breakout boards made by ST themselves.

We use it as 4 rows of 4 zones, with up to 63º of field of view. If we mount the sensor so that the first (top) row 'looks' horizontally, directly in front of Flig, and the other three rows look at the floor at three different distances, it can tell us the angle of a distant wall and whether there are obstacles in front of Flig, and at what distance and in which direction.

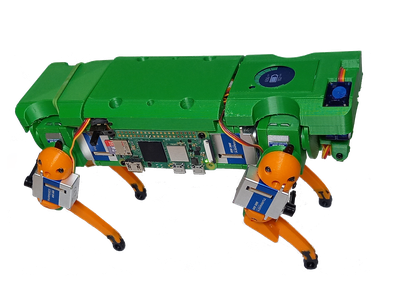

Here is the first prototype:

One thing that came out strange while coding for the sensor: one would expect all zones to 'look' out at angles, as they would through a lens. The consequence would be that, with the sensor pointed directly at a wall, the middle zones would read 'closer' and the edge zones would read furthest away. It turns out that this is true for rows (the top and bottom rows show values further out from the sensor, while the two middle rows show values closer to it), but horizontally the sensor seems to compensate for the angles, so the outside zones show exactly the same value as the inside zones. Strange.

Now, after some gentle trigonometry, the sensor readings are split in two: the top row gives the 'wall' angles, and the bottom three rows are split into four 'distances', each with three values (vertical 'slots') showing how far away some obstacle is or how 'flat' the ground is.

Here is an example when Flig looks at the wall:

Flig is turned directly towards the wall and then slowly rotates left and right. We can see on the display that the software can detect the angle of the wall relative to the robot.

From the top down we can read:

- the wall angle

- the wall distance (the closest part of the wall to the robot)

- the number of points that form a straight line (4 in this case). The code can detect the wall angle from only two points, but that is not as precise as with three or four.

- then the distance of the 'ground/obstacles' at the 45º, 22º, -22º and -45º angles

- the last set of values shows the perceived angle of the floor relative to the robot
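To make the first three readings a bit more concrete, here is a minimal sketch of how a wall angle, closest distance and point count could be worked out from the four top-row values. It is not Flig's actual code: the zone angles in `ZONE_ANGLES_DEG`, the function name `wall_from_top_row` and the `tolerance_mm` parameter are all made up for illustration. It also assumes, going by the horizontal 'compensation' noted earlier, that each reading is a depth straight ahead of the sensor rather than a distance along the zone's own ray.

```python
import math

# Assumed horizontal centre angles of the four top-row zones, in degrees.
# Illustrative values only, not taken from the datasheet or from Flig's code.
ZONE_ANGLES_DEG = (-17.0, -6.0, 6.0, 17.0)


def wall_from_top_row(distances_mm, tolerance_mm=15.0):
    """Return (angle_deg, closest_mm, points_on_line) or None if no line fits.

    Each reading is treated as depth along the sensor axis (an assumption),
    so a zone at angle a with reading d gives the point (d * tan(a), d).
    """
    points = [
        (d * math.tan(math.radians(a)), d)
        for a, d in zip(ZONE_ANGLES_DEG, distances_mm)
        if d > 0  # skip zones with no valid reading
    ]
    if len(points) < 2:
        return None

    # Ordinary least-squares fit of y = slope * x + intercept.
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    if sxx == 0:
        return None  # all points share one x, no usable slope
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    norm = math.hypot(1.0, slope)
    angle_deg = math.degrees(math.atan(slope))
    # Perpendicular distance from the sensor (the origin) to the fitted line.
    closest_mm = abs(intercept) / norm
    # Count the points that sit within tolerance of the fitted line.
    on_line = sum(
        1 for x, y in points
        if abs(y - (slope * x + intercept)) / norm <= tolerance_mm
    )
    return angle_deg, closest_mm, on_line
```

Feeding it four equal readings, for example `wall_from_top_row([500, 500, 500, 500])`, gives a wall angle of 0º with all four points on the line. A single least-squares fit is the simplest option here; one bad zone will drag the line with it, so a real version would probably want to reject outliers first.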

In this example we can see Flig going from looking at the wall at some angle, to turning towards the corner, and then going through the corner until it looks squarely at the other wall of the corner.

That way we can 'detect' that we are approaching a corner, and we can use corners to turn through 90º quite precisely. All Flig needs to do is look at a wall at 0º (strictly speaking, Flig itself would be perpendicular to the wall, but here we are using the sensor's orientation, and 0º means the sensor is parallel to the wall).

When Flig is at 0º to the wall, it starts turning in the desired direction while observing the angle of the wall. Once the angle goes over 20-ish degrees and the 'corner' appears on the 'radar', it waits until the wall 'angle' reaches -20º, which means it is past the corner itself. From that point it just continues turning until the angle reaches 0º again (from the negative side, really) and then stops.
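The turn described above is really a tiny state machine, so here is a rough sketch of it. The state names, the `next_state` function and the `threshold_deg` parameter are invented for this example, and it assumes the turn direction is such that the wall angle goes positive first. 'Reaches -20º' is read here as the angle climbing back up to -20º after flipping negative at the corner, which is only one way to read it.

```python
from enum import Enum, auto


class TurnState(Enum):
    LEAVING_WALL = auto()     # turning away from the first wall, angle growing from 0
    CROSSING_CORNER = auto()  # angle passed +threshold, corner is on the 'radar'
    SQUARING_UP = auto()      # past the corner, angle climbing from -threshold to 0
    DONE = auto()             # looking squarely at the new wall, stop turning


def next_state(state, wall_angle_deg, threshold_deg=20.0):
    """Advance the (hypothetical) corner-turn state machine by one reading."""
    if state is TurnState.LEAVING_WALL and wall_angle_deg >= threshold_deg:
        return TurnState.CROSSING_CORNER
    if state is TurnState.CROSSING_CORNER and -threshold_deg <= wall_angle_deg < 0:
        return TurnState.SQUARING_UP
    if state is TurnState.SQUARING_UP and wall_angle_deg >= 0:
        return TurnState.DONE
    return state


def run_turn(angles_deg, threshold_deg=20.0):
    """Feed a sequence of wall angles through the state machine, return the final state."""
    state = TurnState.LEAVING_WALL
    for angle in angles_deg:
        state = next_state(state, angle, threshold_deg)
    return state
```

In use, the robot would keep turning while the state is anything other than `DONE`, calling `next_state` with every fresh wall angle from the sensor.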

That way Flig can move around the arena, which is 1500mm x 1500mm (pretty much within the target distance of the VL53L5CX), with quite good precision. That is the plan for the Hungry Cattle Challenge.

The last bit needed to ensure precision is the ability for the sensor to swivel 90º to one side or the other. That gives Flig the option to move forward (while constantly correcting itself to keep the wall at 0º) and then, when it reaches the appropriate distance (let's say 110mm from the wall, as troughs are 100mm x 100mm), turn the sensor to one side and adjust laterally to the correct distance (in case moving forward made it slightly miss the position).
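As a rough idea of what 'constantly correcting itself' could look like, here is a small sketch of one control step for the forward approach. Everything in it is an assumption made for illustration: the function name `approach_step`, the gain, the cruise speed, the -1..1 output ranges, and the sign convention that a positive wall angle needs a negative turn to correct. Only the 110mm stopping distance comes from the text above.

```python
def approach_step(wall_angle_deg, wall_distance_mm,
                  target_mm=110.0, steer_gain=0.02, cruise_speed=0.5):
    """Return (forward_speed, turn_rate) for one control step.

    Drives forward while steering to hold the wall at 0 degrees, and stops
    once the wall is at or inside the target distance.
    """
    if wall_distance_mm <= target_mm:
        return 0.0, 0.0  # close enough: stop, ready to swivel the sensor

    # Simple proportional steering, clamped to the assumed -1..1 range.
    turn_rate = max(-1.0, min(1.0, -steer_gain * wall_angle_deg))
    return cruise_speed, turn_rate
```

Once this returns a forward speed of zero, the same kind of proportional correction could be applied sideways, using the distance read after the sensor has swivelled 90º.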

F. L. I. G.
