The Sheep, The Wolves and The Fence

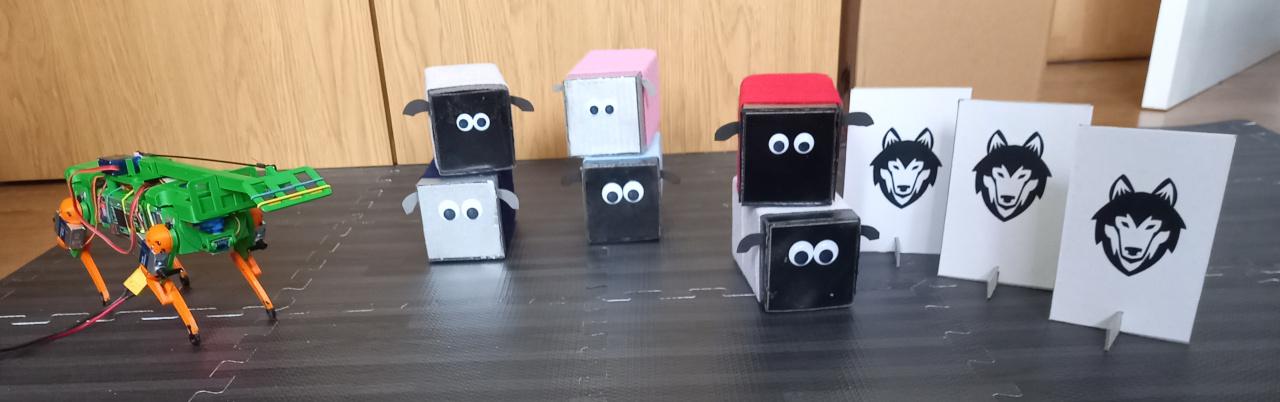

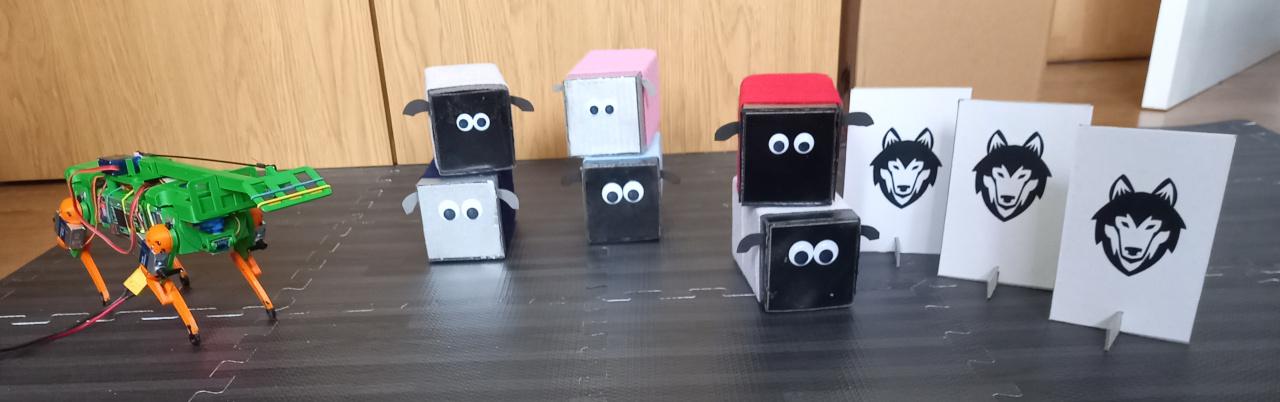

The next challenge was Shepherd's Pi - perfectly suited for Flig. Flig really likes acting the role of a sheepdog and took great pleasure in this challenge. Even the sheep enjoyed it!

At the start of this project, I decided to build a robot arm with a “classic”, articulated design: a rotating base, with “shoulder”, “elbow” and “wrist” joints. I wanted to make something that’s suitable for picking apples, but not specific to this task, something that could maybe even be used or developed further in the future for other applications.

To be able to reach any point in any orientation of the end effector (or gripper), we need six degrees of freedom: this means six servos for joints + one more for operating the gripper. We don’t necessarily need that to be useful though. If we’re happy with limited orientations, we can get away with fewer.

As with every year, this one brought a distraction. Much shorter and more important than those from previous years, but still a welcome break from solving hardware and, even more so, software problems.

This time round I decided to set up a special rig to tape the challenges. The requirements go along these lines:

If your day job title is some variation of “developer” (maybe as opposed to “programmer”!), chances are that you spend more time on figuring out how to use various libraries and frameworks and how to “glue” things together, rather than creating a lot of novel code from scratch or inventing things. Doing Pi Wars can be an escape from all this, with complete freedom to experiment – which also means that reinventing the wheel is perfectly fine! But the opposite can also be true: figuring out what existing building blocks you need and how to fit them together efficiently to achieve a goal can be an interesting puzzle in itself. And creating from scratch “yet another” variant of everything that’s been done by others before can also feel wasteful after a while.

The troughs for Hungry Cattle are 100mm x 100mm and 50mm high. The goal of the challenge is to fill at least half of each trough. To help the judges, we were asked to mark the inside of each trough halfway to the top, so it can be clearly seen that it was filled above that mark. With a two-colour printer it was easy - all I had to do was print a 1mm high stripe all around the inside of the trough.

Since Flig has its inseparable companion Dinky (sometimes invisible to human eyes), the input controller needed to be split between the two on some occasions, for instance when they work together and some human input is needed. The simple idea was to dedicate two otherwise completely neglected buttons of the PS4 controller, namely 'Share' and 'Options', to switching the whole controller between the two. This not only switches the controller functions but also sends the digested values from the controller to two different MQTT topics: joystick/changes and joystick/arm.
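A minimal sketch of that routing idea might look like the following. It is not the actual controller code: the class name `ControllerRouter`, the mode names, the choice of which button selects which mode, and the mapping of `joystick/changes` to Flig are all assumptions made for illustration. The `publish` callback stands in for whatever MQTT client is used.

```python
from typing import Callable

# Assumed mapping: which topic belongs to which robot is a guess.
TOPICS = {"flig": "joystick/changes", "arm": "joystick/arm"}


class ControllerRouter:
    """Routes digested controller values to one of two MQTT topics."""

    def __init__(self, publish: Callable[[str, str], None]) -> None:
        self.publish = publish  # e.g. a thin wrapper around an MQTT client
        self.mode = "flig"      # assumed default mode

    def on_button(self, button: str) -> None:
        # Assumed assignment: 'share' selects Flig, 'options' selects the arm.
        if button == "share":
            self.mode = "flig"
        elif button == "options":
            self.mode = "arm"

    def on_values(self, payload: str) -> str:
        # Send the payload to the topic of the current mode and return
        # that topic so the caller can see where the values went.
        topic = TOPICS[self.mode]
        self.publish(topic, payload)
        return topic
```

Keeping the current mode in one place also means it can be reported back to the driver, rather than being discovered by wiggling a stick.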

But how do we find out which mode we are currently in? One way would be to 'test' some movement and see what is moving - Flig or Dinky's robotic arm - but that's not ideal.

Now we can go and try to do some work on the PiWars challenges. The first one that seems simple enough to solve autonomously is Hungry Cattle. Since we have a distance sensor which can detect wall orientations (over troughs and other potential obstacles on the floor of the arena), we can easily devise a plan for how to move about and get to the right places.

Not everything has gone well. Not getting the ICM-20948 to work with Flig has been the greatest disappointment so far. The first mistake I made was to bury it inside - right over strong, metal-cased servos (with strong magnets in their motors). The original idea was to use the sensor's compass for absolute orientation. A compass is an inherently slow and noisy sensor input, but if it only needs to tell us general 90º orientations it could work well. With the VL53L5CX doing fine refinements to within a couple of degrees of the wall Flig is looking at, the ICM-20948 was supposed to give the rough orientation - to tell us which wall it was looking at. But reading the compass in a room full of electromagnetic emissions, and in the place I've just described, didn't work.
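The "rough 90º orientation" idea can be sketched as below. This is only an illustration under assumptions: the function names are hypothetical, the magnetometer is assumed to be level and calibrated, and the axis convention (heading measured from the sensor's x axis) is a guess rather than anything taken from the ICM-20948 setup on Flig.

```python
import math


def heading_deg(mag_x: float, mag_y: float) -> float:
    """Heading in degrees [0, 360) from two horizontal magnetometer axes."""
    return math.degrees(math.atan2(mag_y, mag_x)) % 360.0


def nearest_quadrant(heading: float) -> int:
    """Snap a heading to the nearest of 0, 90, 180 or 270 degrees."""
    return int(round(heading / 90.0)) % 4 * 90
```

Snapping like this tolerates up to roughly 45º of compass error, which is why a noisy compass could in principle be enough for choosing a wall, as long as nearby magnets do not distort the field.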

Being on the PiWars (not-so-official-but-still-the-place-to-be-to-talk-to-Mike-and-Tim) Discord server is really paying off. So many like-minded people sharing new and interesting stuff, one piece of which ended up being ST's 'VL53L5CX' time of flight sensor. Like the previous variants 'VL53L0X' and 'VL53L1X', the 'VL53L5CX' measures several zones (up to 8x8), but unlike its predecessors, the values for those zones are exposed. It feels like the perfect match for Flig! Thanks to Pal, we've got three pairs of tiny breakout boards made by ST themselves.

The sensor can be used as 4 rows of 4 zones, with up to 63º field of view. If we mount the sensor so that the first (top) row is 'looking' horizontally, directly in front of Flig, and the other three rows look at the floor at three different distances, it can tell us the angle of a distant wall and whether there are obstacles in front of Flig, along with their distance and direction.
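As a rough illustration of how the top row could give a wall angle, the sketch below turns the four distances into points and fits a straight line through them. It is not Flig's actual code: the function name is hypothetical, the 45º horizontal field of view is an assumed default, and each reading is assumed to be a distance along the centre of its zone.

```python
import math
from typing import Sequence


def wall_angle_deg(distances_mm: Sequence[float], fov_deg: float = 45.0) -> float:
    """Estimate the wall angle from one row of zone distances.

    Returns 0 when the wall is square-on; positive when the wall is
    further away towards the right-hand zones.
    """
    n = len(distances_mm)
    zone = fov_deg / n
    xs, ys = [], []
    for i, d in enumerate(distances_mm):
        # Angle of this zone's centre; negative is left of straight ahead.
        a = math.radians((i + 0.5) * zone - fov_deg / 2)
        xs.append(d * math.sin(a))  # sideways position of the hit point
        ys.append(d * math.cos(a))  # forward position of the hit point
    mx, my = sum(xs) / n, sum(ys) / n
    # Least-squares slope of forward position against sideways position.
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return math.degrees(math.atan2(sxy, sxx))
```

Fitting a line through all four points, rather than comparing just the two outer zones, should make the estimate a little less sensitive to one noisy reading.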

In my mind, a simple 'trot' gait, as in the previous video, is a way of 'cheating'. The robot does nothing smart: it has no way of staying stable, measuring the distance covered or lifting its legs higher to get over different terrain.

So, the search for a fix for the existing gaits continues.